David A Notes YOUR OFFWORLD INVESTMENTS IN ARTIFICIAL DUMBNESS PAID $? IN DIVIDENDS

Self-explainer: privacy-preserving contact tracing

Self-explainer: experimenting with taking my usual rough notes on various topics of interest and posting only very slightly nicer versions of them publicly.

Context and problem

Way back in the first few weeks of the Bay Area pandemic shelter-in-place orders, I happened upon an interesting tweet from Carmela Troncoso, a security professor at EPFL. The thread was about a decentralized project for contact tracing called DP-3T. I read the simplified 3-page brief, found it pretty interesting, and bookmarked the longer white paper to read later. A week later (!), the news was abuzz with stories about Google and Apple’s joint project to bring a similar technology to their mobile devices.

When someone is diagnosed with the target infectious disease (eg, COVID-19), public health officials would like to identify everyone who has recently been in close physical proximity to the diagnosed person, so that they can take protective actions to minimize further spread. The key problem here is identifying this set of potentially affected people, known as contact tracing. The decentralized, privacy-preserving variant of this problem is, to a first approximation, how to achieve this goal without simply building a massive central database that tracks everyone’s whereabouts at all times, a solution which has its own risks and drawbacks.

The key trick: ephemeral identifiers

So if Alice has been diagnosed with disease X, how can we identify the set of people who may have been inadvertently exposed to infection via their proximity to her over the past several days? Remember that we don’t want a centralized log of GPS coordinates, or a real-identity snapshot of your personal connections.

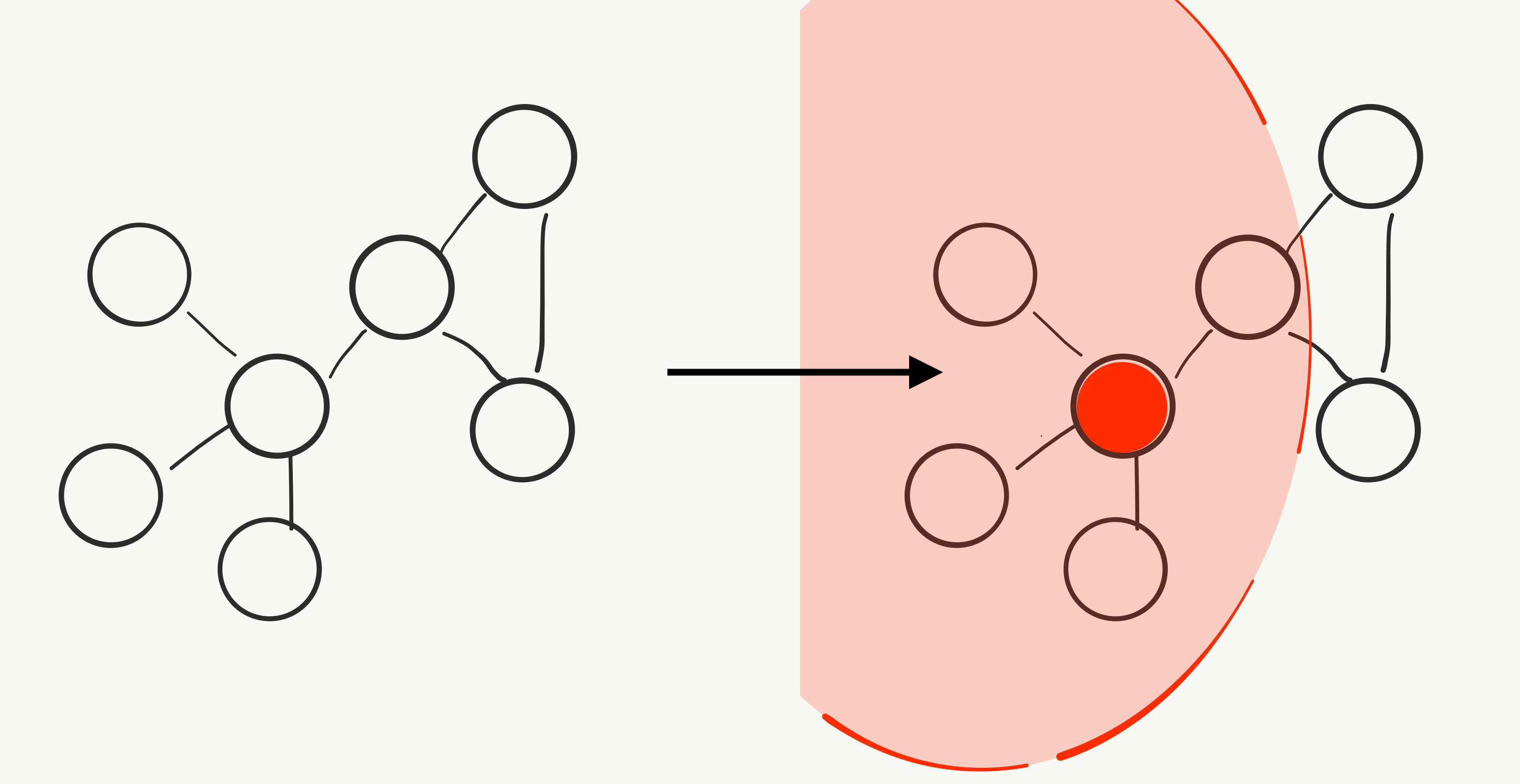

The key trick is the use of ephemeral identifiers: a private stream of codes generated by each installed copy of the app, similar to the rotating codes shown by two-factor authentication (2FA) apps like Google Authenticator. An informal, simplified caricature of the mechanism is as follows (since I wrote this, the project itself has published a nice cartoon summarizing it as well):

- Everywhere Alice goes, her phone constantly broadcasts her current ephemeral code, which changes at some regular interval (eg, hourly). Her app locally (ie, on-device) stores a timestamped list of all the codes it has generated, and everyone else’s copy of the app does the same.

- Every copy of the app is also constantly listening for codes, and locally (again) records a timestamped entry for every code that it “sees”. This data acts as a kind of anonymized, device-local contact history.

- If Alice is diagnosed with the disease, her doctor publishes (with her consent) her list of codes from the past several days to some publicly accessible location, annotated to indicate that anyone who was in contact with these codes is at risk. Everyone else’s copy of the app can periodically poll this source and compare the published list of “at-risk” ephemeral identifiers against its local record of observed identifiers. If there is a match, the user knows they may have been exposed and can take some action (getting tested, self-quarantining, etc).
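To make the caricature concrete, here is a toy in-memory simulation. It is a sketch of the simplified mechanism above only, not of DP-3T or the Google/Apple implementation: the `ContactApp` class and `meet` helper are hypothetical, "broadcasting" is just a function call standing in for Bluetooth, and each code is an independent random 16-byte value per interval.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class ContactApp:
    """Toy model of one installed copy of the app (hypothetical, for illustration)."""
    my_codes: list = field(default_factory=list)    # (interval, code) this phone broadcast
    seen_codes: list = field(default_factory=list)  # (interval, code) heard from others

    def current_code(self, interval: int) -> bytes:
        """Return this phone's code for an interval, generating a fresh one if needed."""
        for t, code in self.my_codes:
            if t == interval:
                return code
        code = secrets.token_bytes(16)  # random 128-bit ephemeral ID
        self.my_codes.append((interval, code))
        return code

    def hear(self, interval: int, code: bytes) -> None:
        """Record an observed code; this history never leaves the device."""
        self.seen_codes.append((interval, code))

    def codes_since(self, first_interval: int) -> set:
        """Codes to publish (with consent) after a diagnosis."""
        return {code for t, code in self.my_codes if t >= first_interval}

    def check_exposure(self, published: set) -> bool:
        """Compare the published at-risk codes against the local contact history."""
        return any(code in published for _, code in self.seen_codes)


def meet(a: ContactApp, b: ContactApp, interval: int) -> None:
    """Two phones near each other during one interval hear each other's current code."""
    a.hear(interval, b.current_code(interval))
    b.hear(interval, a.current_code(interval))


alice, bob, carol = ContactApp(), ContactApp(), ContactApp()
meet(alice, bob, interval=3)  # Alice and Bob are close during hour 3
meet(bob, carol, interval=5)  # Bob and Carol meet later; Carol never meets Alice

# Alice is diagnosed; her doctor publishes her codes from the relevant window.
published = alice.codes_since(first_interval=0)
print(bob.check_exposure(published))    # True
print(carol.check_exposure(published))  # False
```

Bob matches because he heard one of Alice’s published codes during hour 3; Carol only ever heard Bob’s codes, so her phone finds nothing. In this toy model the publishing side never learns who met whom, since the comparison happens on each phone.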

This solution seems like it should satisfy the core functional requirement: identifying users who were in close physical proximity to later-diagnosed individuals (assuming everyone is using the app at all times). Proving the desired privacy properties is a more subtle and complex question, and also depends crucially on careful attention to implementation details. However, at a high level it seems plausible that, assuming the identifiers do not “leak” information, the design does a reasonable job of limiting the utility of the system for unintended or malicious use cases like ad targeting or political dissident hunting.

Additional details and complications

The materials posted on the DP-3T GitHub are quite interesting and go into deeper detail on the practical and legal aspects of the system. For example, the caricature above is not strictly accurate: infected users actually upload an ephemeral ID-generating seed (which is itself ephemeral!) instead of their raw ephemeral IDs (see FAQ).
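A minimal sketch of why uploading a seed is enough, loosely modeled on the "low-cost" design described in the DP-3T white paper: a daily secret key is rotated with a one-way hash, and each day's key is expanded into that day's ephemeral IDs. The constants and function names here are hypothetical, and HMAC-SHA256 stands in for the paper's AES-based stream generator so the example needs only the Python standard library.

```python
import hashlib
import hmac
import secrets

EPHIDS_PER_DAY = 96  # hypothetical: one ephemeral ID per 15-minute slot
EPHID_LEN = 16       # bytes per ephemeral ID
KEY_LEN = 32         # bytes per daily secret key


def next_day_key(sk: bytes) -> bytes:
    """Rotate the daily key with a one-way hash: SK_{t+1} = SHA-256(SK_t)."""
    return hashlib.sha256(sk).digest()


def day_keys(seed: bytes, days: int) -> list:
    """Return `days` consecutive daily keys, starting from `seed`."""
    keys = [seed]
    for _ in range(days - 1):
        keys.append(next_day_key(keys[-1]))
    return keys


def ephids_for_day(sk: bytes, n: int = EPHIDS_PER_DAY) -> list:
    """Expand one day's key into n ephemeral IDs.

    Stand-in construction: derive a broadcast key with HMAC-SHA256, build a
    keystream by HMAC-ing a block counter, then slice it into fixed-size IDs.
    """
    broadcast_key = hmac.new(sk, b"broadcast key", hashlib.sha256).digest()
    stream = b""
    counter = 0
    while len(stream) < n * EPHID_LEN:
        block = hmac.new(broadcast_key, counter.to_bytes(8, "big"), hashlib.sha256)
        stream += block.digest()
        counter += 1
    return [stream[i * EPHID_LEN:(i + 1) * EPHID_LEN] for i in range(n)]


# Alice's phone: a random initial key, rotated daily over five days.
alice_keys = day_keys(secrets.token_bytes(KEY_LEN), days=5)

# After her diagnosis, Alice uploads only the key for the first at-risk day (day 2).
uploaded_seed = alice_keys[2]

# Any other phone can regenerate days 2..4 and every ephemeral ID in them.
reconstructed = day_keys(uploaded_seed, days=3)
at_risk = {ephid for key in reconstructed for ephid in ephids_for_day(key)}

print(reconstructed == alice_keys[2:])                     # True
print(bool(at_risk & set(ephids_for_day(alice_keys[1]))))  # False: day 1 stays private
```

Because the hash only runs forward, publishing the key for the first at-risk day lets everyone regenerate Alice’s IDs from that day on but nothing from before it, and the server stores one short key per diagnosis instead of hundreds of IDs.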

From the perspective of (especially European) privacy regulations and principles, one key claim is that the ephemeral ID-based design ensures that:

… from the server’s perspective, the data held is effectively not personal data, and cannot be linked back to individuals during normal operation.

That is, in some sense any installed copy of the app is (by design) a “dumb” ephemeral ID publisher and receiver, no more and no less.

Some other interesting questions explored are:

- how can user privacy be compromised if a user’s phone is physically confiscated?

- how to avoid users spamming/DoS’ing the system with false diagnoses?

- how could a user opt-in to sharing additional granular information that epidemiologists would find useful?

- what are the back-of-the-envelope scale requirements for the backend that publishes the infected ephemeral IDs (eg, queries per second)?
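The last question lends itself to a quick calculation. Below is a minimal sketch; the `backend_load` function and every input number are hypothetical, chosen only to show the arithmetic. It assumes each phone downloads each day's new batch exactly once (any extra polls just check for updates), and compares uploading one 32-byte seed per case against uploading raw IDs (assumed here to be 14 days × 96 IDs of 16 bytes each).

```python
SECONDS_PER_DAY = 86_400


def backend_load(new_cases_per_day: int, keys_per_case: int, bytes_per_key: int,
                 active_phones: int, polls_per_phone_per_day: int) -> dict:
    """Back-of-the-envelope load for the server publishing at-risk identifiers.

    Simplifying assumption: every phone fetches each day's new batch once.
    """
    batch_bytes = new_cases_per_day * keys_per_case * bytes_per_key
    return {
        "batch_bytes_per_day": batch_bytes,
        "egress_bytes_per_day": batch_bytes * active_phones,
        "requests_per_second": active_phones * polls_per_phone_per_day / SECONDS_PER_DAY,
    }


# Hypothetical inputs: 1,000 new cases/day, 10 million phones, 4 polls per phone per day.
seed_design = backend_load(1_000, 1, 32, 10_000_000, 4)
raw_id_design = backend_load(1_000, 14 * 96, 16, 10_000_000, 4)

for name, load in [("seed upload", seed_design), ("raw IDs", raw_id_design)]:
    print(f"{name}: {load['batch_bytes_per_day'] / 1e6:.3f} MB/day per phone, "
          f"{load['egress_bytes_per_day'] / 1e12:.2f} TB/day total, "
          f"{load['requests_per_second']:.0f} req/s")
```

With these made-up inputs, the seed design publishes 32 KB of new data per day versus about 21.5 MB for raw IDs, which works out to roughly 0.32 TB versus 215 TB of daily egress across ten million phones, while the request rate (about 463 requests per second) is the same either way.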

Will it work?

The effectiveness of this kind of effort would depend on:

- maximizing participation/adoption/coverage

- minimizing “fragmentation”: everyone should be using the same system (at least within a geographical area)

- actual actions taken in response to the contact-tracing information

In some jurisdictions the government can more or less impose app adoption by fiat, while in others users will have to be persuaded to voluntarily participate. Public-private partnerships with influential commercial entities (say, Google+Apple) might be one way to achieve this. Likewise, the immediate actions triggered by the risk signals will vary depending on local political and legal conditions.

When rolled out, how would the efficacy of these solutions be measured? In the absence of randomized controlled trials, what kinds of data analysis techniques could researchers use to estimate the influence of these kinds of systems on the all-important $R_t$ parameters?

Written on April 26th, 2020 by David M. Andrzejewski